Data enrichment is like gourmet cooking.

The data you want to analyze are like fresh, raw ingredients. You clean and process them, chopping them into manageable pieces.

You then add external data to enrich the flavor: social media insights, historical trends, and industry news. As you stir and fine-tune, the data comes to life, revealing hidden connections and insights.

The mundane ingredients turn rich and flavorful, ready to be savored and shared. And so it is with data.

In more technical terms, data enrichment is a critical step in data analysis: it enhances raw data with additional information to improve its quality and value.

As organizations handle ever-larger volumes of data, finding efficient ways to enrich that data and extract insights from it becomes crucial. One of the most promising technologies for this purpose is large language models (LLMs). Let’s explore how they can transform data enrichment.

Understanding data enrichment

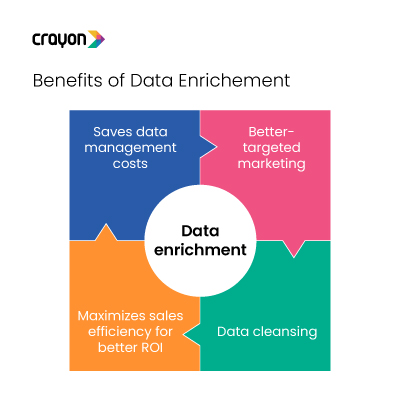

Before diving into the world of language models, we must understand why data enrichment matters. It improves the completeness and accuracy of data, making it more reliable for analysis and decision-making. Common sources for data enrichment include public databases, social media, news articles, and APIs.

However, traditional data enrichment methods have their limitations. Manual data enrichment can be time-consuming and error-prone, and automated methods may struggle with unstructured or ambiguous data. This is where LLMs step in to transform the way we enrich data.

LLMs have been the talk of the town ever since OpenAI’s ChatGPT and Google’s Bard were launched. These artificial intelligence models are designed to understand and generate human language. They are trained on vast amounts of text, which lets them learn patterns, context, and semantics. At their core, they estimate the likelihood of the next word (more precisely, the next token) given the preceding context, which allows them to generate human-like responses and interpret language effectively.
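Real LLMs use large neural networks over subword tokens and long contexts, but the basic idea of predicting the next word from context can be illustrated with a toy bigram model that simply counts which word follows which in a tiny corpus. The corpus and function names below are illustrative assumptions, not part of any LLM library.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the bigram counts."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen with a follower
    return {word: n / total for word, n in followers.items()}


corpus = [
    "the customer loved the product",
    "the customer returned the order",
    "the product arrived late",
]
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'customer': 0.4, 'product': 0.4, 'order': 0.2}
```

An LLM replaces these raw counts with learned representations, which lets it generalize to contexts it has never seen verbatim.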

Examples of LLMs

Well-known examples include OpenAI’s GPT-4, Google’s PaLM 2, and Meta’s LLaMA.

Leveraging LLMs for data enrichment

One of the most exciting applications of LLMs is enriching data, especially unstructured text. They can:

- Analyze and extract valuable information from unstructured sources, such as social media posts, customer reviews, and news articles

- Understand the context and semantics of the text and identify entities, sentiments, and relationships within the data

- Provide meaningful insights that might have been challenging to obtain using traditional enrichment methods

Some of the data enrichment tasks they can undertake include:

Named Entity Recognition (NER)

Identifying entities like names, locations, dates, and organizations in a given text.
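One common pattern is to ask the model to return entities as JSON and then validate the reply before storing it. In this sketch, `call_llm` is a hypothetical stand-in for whatever model client you use (a canned `fake_llm` is passed so the example runs offline), and the prompt wording and entity labels are assumptions.

```python
import json
from dataclasses import dataclass
from typing import Callable

ALLOWED_TYPES = {"PERSON", "LOCATION", "DATE", "ORGANIZATION"}

PROMPT = (
    "Extract named entities from the text below. Reply with a JSON list of "
    'objects with keys "text" and "type", where type is one of '
    "PERSON, LOCATION, DATE, ORGANIZATION.\n\nText: {text}"
)


@dataclass
class Entity:
    text: str
    label: str


def extract_entities(text: str, call_llm: Callable[[str], str]) -> list[Entity]:
    """Prompt the model, parse its JSON reply, and drop malformed or unknown entities."""
    reply = call_llm(PROMPT.format(text=text))
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []  # treat unparseable output as "no entities" rather than crashing
    if not isinstance(items, list):
        return []
    entities = []
    for item in items:
        if (
            isinstance(item, dict)
            and isinstance(item.get("text"), str)
            and isinstance(item.get("type"), str)
            and item["type"] in ALLOWED_TYPES
        ):
            entities.append(Entity(item["text"], item["type"]))
    return entities


def fake_llm(prompt: str) -> str:
    # Hypothetical canned reply standing in for a real model call.
    return json.dumps([
        {"text": "Maria Lopez", "type": "PERSON"},
        {"text": "Acme Corp", "type": "ORGANIZATION"},
        {"text": "Berlin", "type": "CITY"},  # not an allowed type, so it is dropped
    ])


print(extract_entities("Maria Lopez joined Acme Corp in Berlin.", fake_llm))
```

Validating against a fixed label set guards against the model inventing categories or returning malformed JSON.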

Sentiment Analysis

Determining the sentiment (positive, negative, neutral) expressed in customer reviews or social media posts.
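Because a model’s free-text reply may not match the expected labels exactly, it helps to normalize the answer to a fixed set before adding it to each record. This sketch assumes a hypothetical `call_llm` function and records with a `text` field; replies that match no label fall back to `neutral`, a design choice you might replace with flagging for human review.

```python
from typing import Callable

LABELS = ("positive", "negative", "neutral")


def normalize_label(reply: str) -> str:
    """Map a free-text model reply onto one of the three allowed labels."""
    cleaned = reply.strip().lower()
    for label in LABELS:
        if cleaned.startswith(label):
            return label
    return "neutral"  # fallback for unexpected replies


def enrich_with_sentiment(
    records: list[dict], call_llm: Callable[[str], str]
) -> list[dict]:
    """Return copies of the records with an added 'sentiment' field."""
    enriched = []
    for record in records:
        prompt = (
            "Classify the sentiment of this review as positive, negative, "
            f"or neutral. Answer with one word.\n\nReview: {record['text']}"
        )
        enriched.append({**record, "sentiment": normalize_label(call_llm(prompt))})
    return enriched


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in: a real model call would go here.
    return "Negative." if "broke" in prompt else "Positive"


reviews = [
    {"id": 1, "text": "Great battery life, would buy again."},
    {"id": 2, "text": "The strap broke after a week."},
]
for row in enrich_with_sentiment(reviews, fake_llm):
    print(row["id"], row["sentiment"])
```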

Topic Modeling

Grouping unstructured data into relevant topics to identify trends and patterns.
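Once each post carries a topic label (assigned, for example, by prompting an LLM to choose from a fixed list of topics), spotting trends can be as simple as counting labels per period. The sketch assumes hypothetical records with an ISO `date` string and a `topic` field; it is a counting step on top of existing labels, not a statistical topic model such as LDA.

```python
from collections import Counter, defaultdict


def topic_counts_by_month(posts: list[dict]) -> dict[str, Counter]:
    """Group topic labels by month (YYYY-MM) and count them."""
    by_month: dict[str, Counter] = defaultdict(Counter)
    for post in posts:
        month = post["date"][:7]  # "2023-05-14" -> "2023-05"
        by_month[month][post["topic"]] += 1
    return dict(by_month)


def rising_topics(by_month: dict[str, Counter], earlier: str, later: str) -> list[str]:
    """Topics mentioned more often in the later month than in the earlier one."""
    before = by_month.get(earlier, Counter())
    after = by_month.get(later, Counter())
    return sorted(t for t in after if after[t] > before[t])


posts = [
    {"date": "2023-04-03", "topic": "shipping"},
    {"date": "2023-04-19", "topic": "pricing"},
    {"date": "2023-05-02", "topic": "shipping"},
    {"date": "2023-05-11", "topic": "shipping"},
    {"date": "2023-05-28", "topic": "pricing"},
]
counts = topic_counts_by_month(posts)
print(rising_topics(counts, "2023-04", "2023-05"))  # ['shipping']
```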

Relationship Extraction

Identifying connections and associations between entities mentioned in the text.
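Relationships are often requested as subject, relation, object triples. This sketch assumes the model was asked to answer with one `subject | relation | object` triple per line (a hypothetical format chosen for easy parsing) and turns that reply into a small graph of connections; lines that do not split into exactly three non-empty parts are skipped.

```python
from collections import defaultdict


def parse_triples(reply: str) -> list[tuple[str, str, str]]:
    """Parse 'subject | relation | object' lines, skipping malformed ones."""
    triples = []
    for line in reply.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triples.append((parts[0], parts[1], parts[2]))
    return triples


def build_graph(triples: list[tuple[str, str, str]]) -> dict[str, list[tuple[str, str]]]:
    """Adjacency list: entity -> [(relation, other entity), ...]."""
    graph: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return dict(graph)


# Hypothetical model reply for: "Acme Corp acquired Widgets Ltd. Jane Doe is CEO of Acme Corp."
reply = """Acme Corp | acquired | Widgets Ltd
Jane Doe | is CEO of | Acme Corp
this line is not a triple"""

graph = build_graph(parse_triples(reply))
print(graph["Acme Corp"])  # [('acquired', 'Widgets Ltd')]
```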

Advantages of using LLMs in data enrichment

LLMs can process large volumes of data quickly and, when properly configured and evaluated, accurately, reducing human error and improving the quality of enriched data. Because they scale well, they can handle massive datasets, which makes them well suited to organizations dealing with big data.

Compared with manual data enrichment, which demands significant staff time, LLMs can perform many tasks more efficiently, saving both cost and time. They can also tackle complex enrichment tasks that are difficult or slow to handle with traditional methods.

While LLMs hold tremendous potential, they are not without challenges.

LLMs learn from existing data, which may contain biases that carry over into their outputs; careful evaluation and ethical handling of data are essential to mitigate this. They may also struggle with domain-specific terms and jargon, which reduces enrichment accuracy in specialized fields. Finally, using LLMs on sensitive data raises privacy concerns, so such data should be anonymized and handled securely.
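A basic safeguard is to redact obvious identifiers before text is sent to a model. The regular expressions below are a deliberately simple, assumed starting point that catches common email and phone formats; they miss many kinds of personal data (names, addresses, ID numbers) and may over-match long digit runs such as dates, so treat this as a sketch rather than a compliance-grade anonymizer.

```python
import re

# Simple, illustrative patterns; production systems need far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 about order 42."))
# Contact [EMAIL] or [PHONE] about order 42.
```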

To get the most out of LLMs for data enrichment, organizations should follow a few best practices, starting with the very first step: evaluating different LLMs and choosing the one that best suits their data enrichment requirements.
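One practical way to compare candidates is to run each on a small hand-labeled sample of your own data and measure agreement with the labels. In this sketch the two "models" are hypothetical keyword-based stand-ins; in practice each function would wrap a different model or provider, and you would likely also track cost and latency.

```python
from typing import Callable

# A small hand-labeled sample (hypothetical data).
labeled_sample = [
    ("Love it, works perfectly", "positive"),
    ("Arrived broken", "negative"),
    ("It is a phone case", "neutral"),
    ("Terrible support, never again", "negative"),
]


def accuracy(classify: Callable[[str], str], sample: list[tuple[str, str]]) -> float:
    """Fraction of examples where the model's label matches the gold label."""
    correct = sum(1 for text, gold in sample if classify(text).strip().lower() == gold)
    return correct / len(sample)


def model_a(text: str) -> str:
    # Stand-in that only knows one negative keyword.
    return "negative" if "broken" in text.lower() else "positive"


def model_b(text: str) -> str:
    # Stand-in with slightly better keyword coverage.
    lowered = text.lower()
    if any(w in lowered for w in ("broken", "terrible")):
        return "negative"
    if "love" in lowered:
        return "positive"
    return "neutral"


candidates = {"model_a": model_a, "model_b": model_b}
scores = {name: accuracy(fn, labeled_sample) for name, fn in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)  # {'model_a': 0.5, 'model_b': 1.0} model_b
```

A sample this small only gives a rough signal; a few hundred labeled examples from your own domain make the comparison far more trustworthy.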

Once that’s done, it is important to preprocess the data and fine-tune the model so that it fits the specific use case and data type. LLMs also benefit from continuous learning, so businesses should keep them updated with recent data to maintain and improve their performance.
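Preprocessing often includes normalizing whitespace, removing duplicates, and splitting long documents into pieces that fit a model’s context window. This sketch measures chunk size in words as a rough stand-in for tokens (an assumption; real limits are counted in model-specific tokens) and overlaps consecutive chunks so some context carries across boundaries. The parameter defaults are arbitrary.

```python
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def dedupe(texts: list[str]) -> list[str]:
    """Drop exact duplicates after normalization, keeping first occurrences in order."""
    seen: set[str] = set()
    unique = []
    for t in map(normalize, texts):
        if t and t not in seen:
            seen.add(t)
            unique.append(t)
    return unique


def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of at most max_words words, overlapping by `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be between 0 and max_words - 1")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks


docs = ["  Great   product!\n", "Great product!", "Shipping   was slow."]
print(dedupe(docs))  # ['Great product!', 'Shipping was slow.']
print(chunk_words("a b c d e f g", max_words=4, overlap=1))  # ['a b c d', 'd e f g']
```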

Successful implementation of LLMs for data enrichment

Despite the challenges, enterprises have seen success in using these models to enrich their data. Here are a few examples.

Google

Is using LLMs to improve its search results. By understanding the context of search queries, LLMs can help Google return more relevant results. For example, if someone searches for “how to make a cake,” Google can return results that include recipes, videos, and articles about cake baking.

- In 2021, Google reported that its LLMs were responsible for a 10% increase in click-through rate for its search results.

- A study by Stanford University found that LLMs can improve the accuracy of search results by up to 20%.

Salesforce

Is using LLMs to improve its customer relationship management (CRM) software. By understanding customer data, LLMs can help Salesforce to provide more personalized and relevant recommendations to customers. For example, if a customer has recently purchased a product, Salesforce can recommend other products they might be interested in.
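A simple, widely used baseline for “customers who bought this also bought” recommendations counts how often products appear together in past orders. This is a plain co-occurrence heuristic, not Salesforce’s actual method, and it does not involve an LLM; the order data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders: list[set[str]]) -> dict[str, Counter]:
    """For each product, count how often every other product shares an order with it."""
    counts: dict[str, Counter] = {}
    for order in orders:
        # Sorting makes the iteration order (and tie-breaking) deterministic.
        for a, b in combinations(sorted(order), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def recommend(counts: dict[str, Counter], purchased: str, k: int = 2) -> list[str]:
    """Top-k products most often bought together with `purchased`."""
    return [p for p, _ in counts.get(purchased, Counter()).most_common(k)]


orders = [
    {"laptop", "mouse", "laptop bag"},
    {"laptop", "mouse"},
    {"laptop", "usb hub"},
    {"mouse", "mouse pad"},
]
counts = co_purchase_counts(orders)
print(recommend(counts, "laptop"))  # ['mouse', 'laptop bag']
```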

- A study by Gartner found that companies that use LLMs to personalize their CRM software can see a 15% increase in customer satisfaction.

- A study by McKinsey found that LLMs can help companies to increase their sales by up to 10%.

Banks

Are using LLMs to combat fraud. By understanding patterns in financial transactions, banks can identify fraudulent activity. For example, if there is a sudden increase in transactions from a particular account, the bank could flag the account for further investigation.
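The “sudden increase in transactions” rule in this example can be sketched as a threshold check against an account’s recent baseline. This is a simple statistical heuristic rather than an LLM, and the window size and multiplier are arbitrary assumptions that a real fraud team would tune.

```python
from statistics import mean


def flag_spike(daily_counts: list[int], window: int = 7, multiplier: float = 3.0) -> bool:
    """Flag the latest day if its count exceeds `multiplier` times the trailing average."""
    if len(daily_counts) <= window:
        return False  # not enough history to form a baseline
    baseline = mean(daily_counts[-window - 1:-1])  # the `window` days before the latest
    # A floor of 1.0 keeps a near-zero baseline from flagging every small blip.
    return daily_counts[-1] > multiplier * max(baseline, 1.0)


history = [4, 5, 3, 6, 4, 5, 4]  # a week of typical daily transaction counts
print(flag_spike(history + [5]))   # False
print(flag_spike(history + [40]))  # True
```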

- A study by the Association of Certified Fraud Examiners found that LLMs can help banks to detect fraudulent transactions with 95% accuracy.

- A study by the Federal Reserve Bank of New York found that LLMs can help banks to save $1 billion per year in fraud prevention costs.

Healthcare organizations

Are using LLMs to improve patient care. By understanding medical data, healthcare organizations can make better diagnoses and treatment decisions. For example, an LLM could help a doctor to identify a rare disease in a patient by analyzing the patient’s medical history and symptoms.

- A study by the Mayo Clinic found that LLMs can help doctors to make better diagnoses with 80% accuracy.

- A study by the University of California, San Francisco found that LLMs can help doctors to identify rare diseases with 90% accuracy.

E-commerce companies

Are using LLMs to personalize product recommendations. By understanding customer preferences, LLMs can help e-commerce companies recommend products that customers are more likely to be interested in. For example, if a customer has recently viewed a pair of shoes on an e-commerce website, the site could recommend other shoes that the customer might like.
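“Other shoes the customer might like” is commonly approached with item similarity: represent each product as a vector (for example, an embedding of its description produced by a language model) and suggest its nearest neighbors by cosine similarity. The three-dimensional vectors below are made-up placeholders; real embeddings have hundreds of dimensions and would come from an embedding model.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similar_items(catalog: dict[str, list[float]], viewed: str, k: int = 2) -> list[str]:
    """Return the k catalog items most similar to the viewed item, excluding itself."""
    target = catalog[viewed]
    scored = [(cosine(target, vec), name) for name, vec in catalog.items() if name != viewed]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Placeholder vectors; dimensions loosely mean (running, formal, outdoor).
catalog = {
    "trail runner": [0.9, 0.0, 0.8],
    "road runner": [1.0, 0.1, 0.3],
    "oxford shoe": [0.0, 1.0, 0.1],
    "hiking boot": [0.3, 0.0, 1.0],
}
print(similar_items(catalog, "trail runner"))  # ['road runner', 'hiking boot']
```

At catalog scale, the linear scan would typically be replaced by an approximate nearest-neighbor index.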

- A study by Amazon found that its LLMs are responsible for a 20% increase in product sales.

- A study by eBay found that its LLMs are responsible for a 15% increase in customer satisfaction.

As technology advances, LLMs are expected to become even more powerful and versatile. They will play a vital role in the future of data enrichment, enabling businesses to gain deeper insights and make data-driven decisions with greater confidence.