As technology evolves day by day, separate strands keep combining to produce ingenious results. Generative AI is being incorporated into wearables. IoT is converging with machine learning and AI, and so is edge computing.

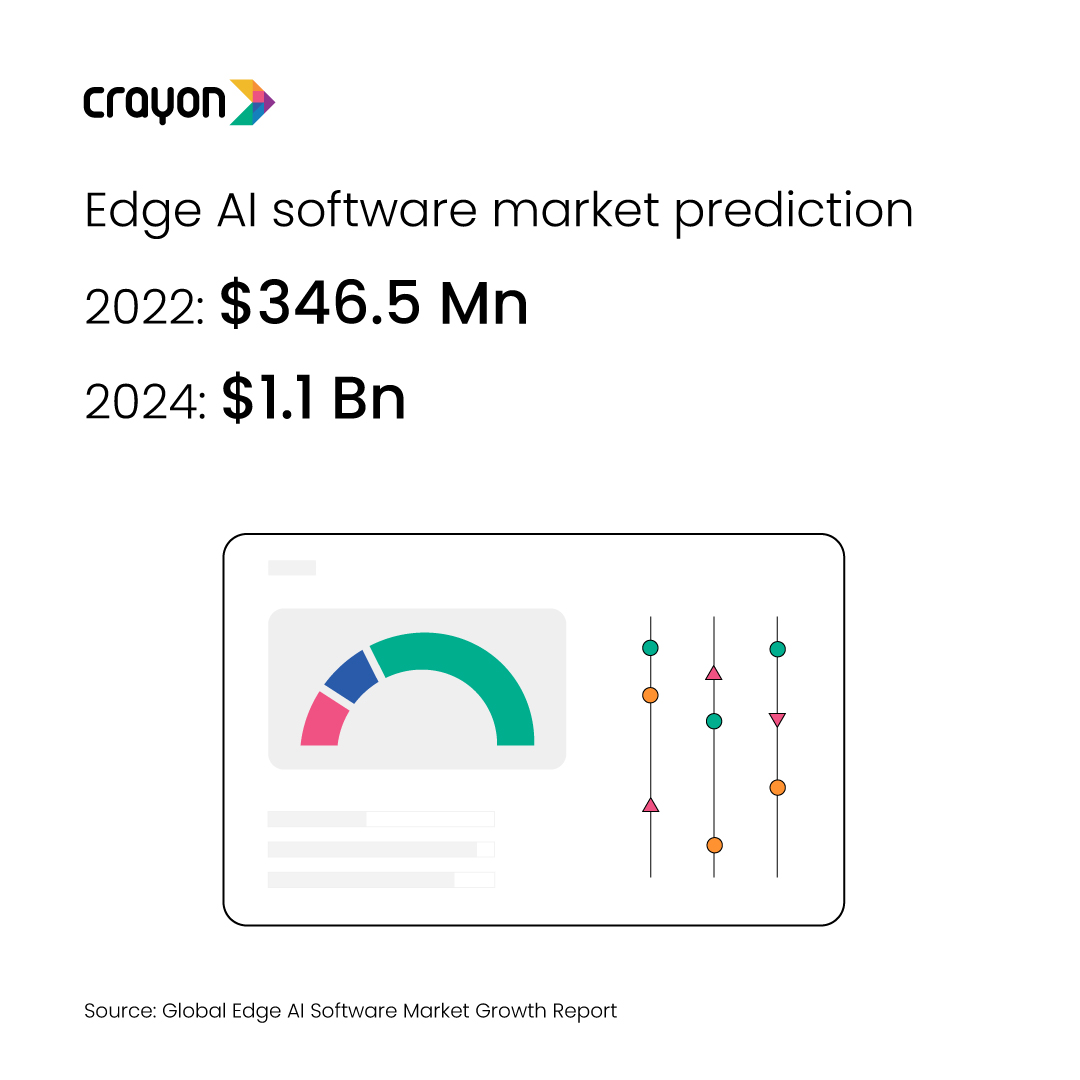

In fact, multiple predictions are touting Edge AI as a major player in 2024. Before we get into the details, let’s cover some basics.

What is Edge Computing?

Edge computing is like having tiny smart brains right where the action is – closer to your devices. Instead of sending all your data to a faraway cloud, it processes stuff locally. Plus, it can be more private and secure because your data mostly stays on your gadgets. That’s why edge computing is the secret sauce behind things like instant video streaming, quick responses from your smart speakers, and even the snappy reactions of self-driving cars.

It’s an exciting concept with the promise of faster data handling and improved privacy. Now, picture teaming this up with the mighty computational abilities of AI. It feels like a match made in tech heaven, offering a path to address data privacy concerns and supercharge processing speed.

In 2022, the edge computing market alone increased by 14.8% year-on-year to reach $176 Bn. This strong growth is expected to carry into 2025, when spending is predicted to reach roughly $274 Bn. Combined with AI capabilities, the potential only grows larger.

The overall benefits of Edge computing for AI

Latency & Speed: Edge computing is like a time wizard for data. In scenarios where milliseconds matter, like autonomous vehicles or fast-paced manufacturing, data needs to be processed lightning-quick. Staying local cuts out the round trip to a distant server and the lag that comes with it, keeping your data relevant, useful, and ready for action. It also eases the traffic jam on your enterprise network, boosting performance.

Cost-Efficient: Not all data is gold, and edge computing knows it. It helps you sort your data into categories, so you’re not burning money on transporting, managing, and securing less valuable stuff. By keeping data at the edge, you save on pricey bandwidth and reduce data redundancy, which means fewer dollars down the drain.

Higher Scalability: Edge computing doesn’t break the scalability rulebook; it’s just smarter about it. Rather than expanding a far-off data center every time demand grows, you add capacity by setting up IoT devices and data tools right where you need them. No more costly coordination or data center build-outs.

Improved Security: Centralized data flows can be a hacker’s playground, but edge computing throws in a plot twist. By distributing data analysis tools, you spread the risk, so a single breach exposes less. And less data in transit means less for prying eyes to intercept.

Increased Reliability: In the wild world of IoT, where connectivity is as fickle as the weather, edge devices save the day. They can handle data locally, even when the internet’s playing hide-and-seek. And in the face of growing data demands, local processing keeps operations steady.

Real-Time Analytics: Edge computing is the speed demon of data analysis. In the blink of an eye, it makes real-time decisions, ideal for situations where timing is everything. Picture a factory where machines need to work together flawlessly. Edge computing helps ensure they don’t miss a beat, avoiding costly errors down the line.
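To make a few of these benefits concrete, here is a minimal Python sketch of an edge device that decides locally which readings matter, compresses the rest into a summary, and holds messages while the connection is down. Everything in it is an assumption made for illustration: the `EdgeNode` class, the `send` callable, and the threshold are hypothetical and not taken from any particular product.

```python
from collections import deque
from statistics import mean


class EdgeNode:
    """Hypothetical edge device that analyzes readings locally."""

    def __init__(self, threshold, send, buffer_size=100):
        self.threshold = threshold  # readings above this count as noteworthy
        self.send = send            # uploads one message; raises ConnectionError when offline
        self.outbox = deque(maxlen=buffer_size)  # bounded queue of messages awaiting upload
        self.window = []            # raw readings that never leave the device

    def ingest(self, reading):
        """Decide on the device, with no network round trip, whether a reading is an alert."""
        self.window.append(reading)
        is_alert = reading > self.threshold
        if is_alert:
            self.outbox.append({"type": "alert", "value": reading})
        return is_alert

    def summarize(self):
        """Replace the raw window with a single compact summary message."""
        if self.window:
            self.outbox.append(
                {"type": "summary", "count": len(self.window), "mean": mean(self.window)}
            )
            self.window = []

    def flush(self):
        """Upload queued messages in order; keep them queued if the link is down."""
        sent = 0
        while self.outbox:
            try:
                self.send(self.outbox[0])
            except ConnectionError:
                break
            self.outbox.popleft()
            sent += 1
        return sent
```

In this sketch, four raw readings would leave the device as at most one alert and one summary, and nothing is lost if `flush()` runs while the network is unavailable (up to the buffer size).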

Understanding the inner workings of Edge AI

In the world of Edge AI, the process starts with training a model for a specific task using a suitable dataset. Training the model essentially means teaching it to identify patterns within the training dataset. This trained model is then evaluated using a separate test dataset to ensure it performs well on data it hasn’t seen before, but which shares characteristics with the training data.
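The train-then-evaluate loop described above can be sketched in a few lines of Python. This is an illustration only: the dataset is synthetic, the single "vibration" feature is made up, and the nearest-centroid classifier is simply a stand-in for whatever model a real project would use.

```python
import random
from statistics import mean


def make_dataset(n, seed):
    """Synthetic, made-up data: one 'vibration' feature, label 1 means a faulty machine."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 5.0 if label else 2.0
        data.append((rng.gauss(centre, 0.5), label))
    return data


def train(samples):
    """'Training' here means learning the mean feature value of each class."""
    return {label: mean(x for x, y in samples if y == label) for label in (0, 1)}


def predict(model, x):
    """Pick the class whose learned centroid is closest to x."""
    return min(model, key=lambda label: abs(x - model[label]))


def accuracy(model, samples):
    """Share of samples the model labels correctly."""
    return sum(predict(model, x) == y for x, y in samples) / len(samples)


data = make_dataset(200, seed=0)
train_set, test_set = data[:150], data[150:]  # the test set is never seen during training
model = train(train_set)
test_accuracy = accuracy(model, test_set)
```

The point to notice is the split: `test_set` comes from the same generator as `train_set`, so it shares the training data's characteristics, but none of its rows were used to fit the model.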

Once the model is primed and ready, it’s put into action, or as we call it, “put into production.” This means it’s now available for inference in a particular context, often functioning as a microservice. The model operates via an API, and its endpoints are like doors that can be knocked on for predictions. When input data is sent to these endpoints, the model processes it and returns predictions, which can be further used in other software components or even displayed on an application’s frontend.
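Here is roughly what “knocking on the door” could look like, using only Python’s standard library. Treat it as a sketch under stated assumptions: the `/predict` path, the `vibration` field, and the threshold rule standing in for a trained model are all hypothetical, and a production service would add input validation, authentication, and a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Stand-in for a trained model: a hypothetical threshold rule."""
    return {"faulty": features["vibration"] > 3.5}


class PredictHandler(BaseHTTPRequestHandler):
    """Serves predictions on POST /predict (a path assumed for this example)."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        # Read the JSON input sent by the caller.
        length = int(self.headers.get("Content-Length", 0))
        features = json.loads(self.rfile.read(length))
        # Run inference and return the prediction as JSON.
        body = json.dumps(predict(features)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def make_server(port=0):
    """Bind to localhost; port 0 lets the operating system pick a free port."""
    return HTTPServer(("127.0.0.1", port), PredictHandler)
```

Calling `make_server(8000).serve_forever()` would start the service locally. Any other component, such as a dashboard or another microservice, could then POST `{"vibration": 4.2}` to `/predict` and receive a JSON prediction back.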

It’s no wonder then that Edge AI is being used across industries.

Let’s explore three areas where it is making its presence felt.

1/ Finance:

Fraud detection: Edge AI can analyze transaction data in real time to detect fraudulent transactions. Fintech major PayPal is using edge AI to flag fraudulent transactions before they are completed, which helps protect its customers.

Customer service: Edge AI can also improve customer service. JPMorgan Chase is using edge AI to power its chatbots, which help customers with a variety of tasks, such as transferring money and opening accounts.

2/ Manufacturing:

Predictive maintenance: Edge AI can analyze sensor data from machines to predict when they are likely to fail, so that maintenance can be scheduled proactively. This helps reduce downtime and improve production efficiency. GE is using edge AI to predict when aircraft engines are likely to need maintenance, which is helping airlines save millions of dollars each year.

Quality control: Edge AI can inspect products for defects in real time, which helps improve product quality and reduce waste. Tesla is using edge AI to inspect its cars for defects as they are assembled on the production line.

Process optimization: Edge AI can tune manufacturing processes in real time, improving efficiency and reducing costs. For example, Siemens is using edge AI to optimize the production of steel, which is helping steelmakers reduce their energy consumption and CO2 emissions.
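For a feel of how predictive maintenance might run on the device itself, here is a small Python sketch. It is an assumption-laden illustration, not how any of the companies above do it: the `MaintenanceMonitor` class is hypothetical, and a rolling-mean check against a healthy baseline is about the simplest rule that could stand in for a real model.

```python
from collections import deque
from statistics import mean, stdev


class MaintenanceMonitor:
    """Hypothetical on-device check: flag a machine whose readings drift from its baseline."""

    def __init__(self, baseline, window=5, sigmas=3.0):
        self.mu = mean(baseline)              # typical reading when the machine is healthy
        self.sigma = stdev(baseline)          # normal spread around that reading
        self.recent = deque(maxlen=window)    # only the latest readings are kept on the device
        self.sigmas = sigmas

    def update(self, reading):
        """Return True when the rolling mean leaves the allowed band around the baseline."""
        self.recent.append(reading)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet to judge
        return abs(mean(self.recent) - self.mu) > self.sigmas * self.sigma


monitor = MaintenanceMonitor(baseline=[1.0, 1.1, 0.9, 1.0, 1.05, 0.95])
readings = [1.0, 1.02, 0.98, 1.01, 0.99, 1.6, 1.6, 1.6]
flags = [monitor.update(r) for r in readings]
```

Averaging over a window means a single odd reading does not trigger a maintenance call, while a sustained shift does. The window size and the three-sigma band are arbitrary choices for the example.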

3/ Retail:

Cashierless checkout: Edge AI can identify customers and their purchases without the need for cashiers. This helps speed up checkout times and reduce labor costs. Amazon Go stores use edge AI to allow customers to shop without having to scan their items or go through a checkout line.

Personalized recommendations: Edge AI can analyze customer data to provide personalized recommendations, which can increase sales and improve the customer experience. Netflix uses edge AI to recommend movies and TV shows to its users based on their viewing history.

Theft prevention: Edge AI can detect theft and other criminal activity in stores, helping to reduce losses and improve security. For example, Walmart is using edge AI to analyze video footage from its stores to detect theft and other suspicious activity.

The concerns surrounding Edge AI

Yet, while this combination of edge computing and AI sounds fantastic, we should keep our feet on the ground. The real-world implementation might not be as simple as it seems.

We can’t talk about AI without addressing the big ethical concerns. As AI models become more complex, we need to think more carefully about how we use data, how we make sure AI is fair, and how we can explain how AI makes decisions. These issues don’t disappear when we use AI at the edge of the network. In fact, they can become even more complex.

Here are some possible ethical issues with using AI at the edge:

Variability in decision-making: If each AI model learns and adapts based on its own experiences, this could lead to different AI models making different decisions in the same situation. For example, one autonomous car might be very cautious and brake often, while another car might be too aggressive and not brake enough. This could be dangerous.

Security and data tampering: Since data is processed locally, there may be different levels of security implemented across individual devices. This could make it easier for hackers to steal or tamper with data, which could affect the AI’s decision-making.

Bias: If each decentralized AI model learns from its local environment, it could develop biases based on that data. For example, a car frequently operated in a suburban environment might not perform well in a densely populated urban setting.

Ethical drift: If each AI model updates itself continuously based on local data, there’s the risk of “ethical drift,” where the system’s decision-making criteria could evolve in unforeseen or undesirable directions over time.

Jurisdictional challenges: Different jurisdictions may have different rules for AI and machine learning. A decentralized AI model makes it more difficult to apply and enforce these rules, especially when an AI-powered device crosses from one jurisdiction into another.

By decentralizing AI, we’re essentially allowing for a multitude of independently acting and evolving AI models. While this has its advantages, it also introduces these and potentially other complexities that need to be carefully considered.
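One way to keep an eye on variability and drift across a fleet is to run every device’s model against the same fixed set of probe inputs and compare its answers with a reference model. The Python sketch below shows the idea only. The function names, the braking rules, and the 10% tolerance are all hypothetical, and a real audit would need far richer probes than a single distance value.

```python
def disagreement_rate(local_model, reference_model, probe_inputs):
    """Fraction of probe inputs where the local model decides differently from the reference."""
    differing = sum(local_model(x) != reference_model(x) for x in probe_inputs)
    return differing / len(probe_inputs)


def audit_fleet(local_models, reference_model, probe_inputs, max_rate=0.1):
    """Return the ids of devices whose decisions diverge from the reference beyond max_rate."""
    return [
        device_id
        for device_id, model in local_models.items()
        if disagreement_rate(model, reference_model, probe_inputs) > max_rate
    ]


# Hypothetical braking policies: brake when an obstacle is closer than some distance in metres.
def reference(distance):
    return distance < 30


fleet = {
    "car-a": lambda distance: distance < 31,  # nearly identical to the reference
    "car-b": lambda distance: distance < 12,  # has drifted toward braking late
}

probes = list(range(0, 100, 5))  # the same fixed probe set for every device
flagged = audit_fleet(fleet, reference, probes)
```

A check like this does not say which model is right. It only surfaces devices that have moved away from the agreed baseline, so that a person can review them.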

So, will Edge AI be the cornerstone of tech innovation in 2024? While the potential benefits are undeniable, we should remain conscious of the ethical and practical implications that come with decentralizing intelligent systems.

Want to learn more about the world of AI? Head to #TheAIAlphabet series.
